A


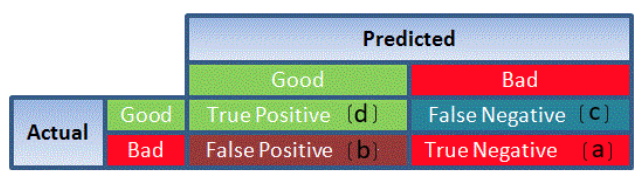

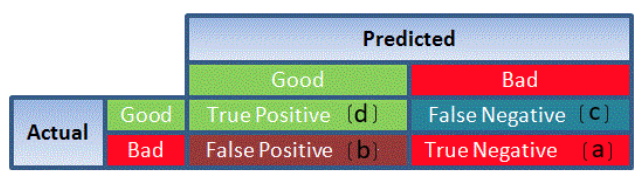

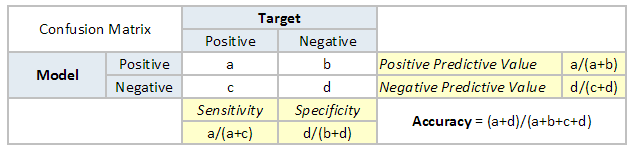

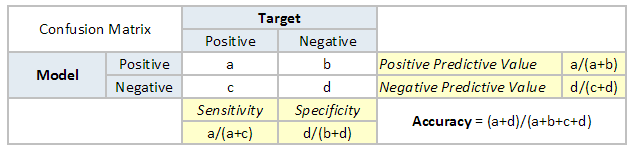

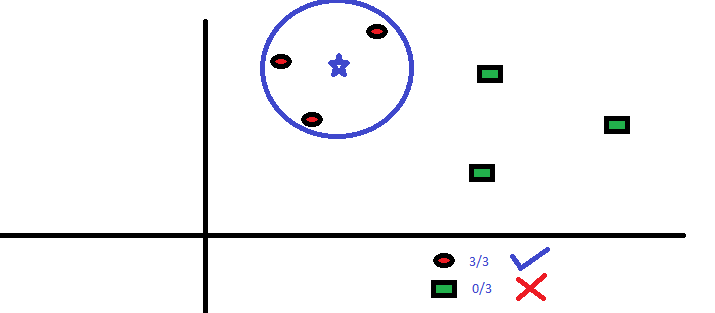

Accuracy

Accuracy is a metric for evaluating how well a classification model performs. The confusion matrix helps explain it:

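Accuracy is the ratio of correctly predicted observations to the total number of predictions. For a binary classifier, in terms of the confusion matrix:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TP, TN, FP, and FN are the numbers of true positives, true negatives, false positives, and false negatives.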
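As a quick illustration, accuracy can be computed directly from lists of actual and predicted labels. The function name `accuracy` and the example labels are hypothetical, chosen only for this sketch:

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the actual labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)

# Hypothetical labels: 4 of the 5 predictions are correct
print(accuracy([1, 0, 1, 1, 0], [1, 0, 0, 1, 0]))  # 0.8
```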


Adam Optimization

Adam (adaptive moment estimation) is an optimization algorithm used to train deep learning models. It is an extension of stochastic gradient descent that keeps running averages of both the gradients and the squared gradients (the first and second moments) and uses them to compute an adaptive learning rate for each parameter.

Features:

- It is computationally efficient and has modest memory requirements

- It is invariant to diagonal rescaling of the gradients

- In practice, Adam often works well compared with other stochastic optimization methods
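The update rule described above can be sketched for a single scalar parameter. This is a minimal illustration, not a library implementation: `adam_minimize` is a hypothetical helper, the defaults `beta1=0.9`, `beta2=0.999`, `eps=1e-8` are the values suggested in the original Adam paper, and `lr=0.01` plus the quadratic test function are illustrative choices.

```python
import math

def adam_minimize(grad, x0, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8, steps=5000):
    """Minimize a one-dimensional function with Adam, given its gradient."""
    x = x0
    m = 0.0  # running average of the gradients (first moment)
    v = 0.0  # running average of the squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        # Large squared gradients shrink the effective step for this parameter
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x

# f(x) = (x - 3)^2 has gradient 2(x - 3) and its minimum at x = 3
x_min = adam_minimize(lambda x: 2 * (x - 3), x0=0.0)
print(round(x_min, 3))
```

In a real model the same update is applied element-wise to every weight, which is what gives each parameter its own adaptive learning rate.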

Apache Spark

Apache Spark is an open-source cluster computing framework. Spark can be deployed in a variety of ways, provides native bindings for the Java, Scala, Python, and R programming languages, and supports SQL, streaming data, and machine learning. Some of its key features are listed below:

- Speed: according to the Spark project's own figures, Spark can run applications on a Hadoop cluster up to 100 times faster than Hadoop MapReduce in memory, and 10 times faster on disk

- Spark supports popular data science programming languages such as R, Python, and Scala

- Spark includes a machine learning library called MLlib, which provides common algorithms for classification, regression, and clustering

Autoregression

Autoregression is a time series model that uses observations from previous time steps as input to a regression equation to predict the value at the next time step. An autoregressive model specifies that the output variable depends linearly on its own previous values. The input variables are observations at previous time steps, called lag variables.

For example, we can predict the value for the next time step (t+1) from the observations at the last two time steps (t and t-1). As a regression model, this looks as follows:

X(t+1) = b0 + b1*X(t) + b2*X(t-1)

Since the regression model uses data from the same input variable at previous time steps, it is referred to as an autoregression.
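A minimal sketch of fitting the two-lag model X(t+1) = b0 + b1*X(t) + b2*X(t-1) by ordinary least squares, in plain Python so it has no dependencies. The helper names (`solve_linear`, `fit_ar2`, `forecast`) are hypothetical, and the series is synthetic and noise-free, generated from known coefficients so the fit can be checked; real data would include noise and would usually be fitted with a statistics library.

```python
def solve_linear(a, b):
    """Solve a small linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def fit_ar2(series):
    """Least-squares estimate of b0, b1, b2 in X(t+1) = b0 + b1*X(t) + b2*X(t-1)."""
    rows = [[1.0, series[t], series[t - 1]] for t in range(1, len(series) - 1)]
    targets = [series[t + 1] for t in range(1, len(series) - 1)]
    # Normal equations: (A^T A) b = A^T y
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    aty = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(3)]
    return solve_linear(ata, aty)


def forecast(series, coeffs):
    """One-step-ahead prediction from the two most recent observations."""
    b0, b1, b2 = coeffs
    return b0 + b1 * series[-1] + b2 * series[-2]


# Synthetic, noise-free series generated with b0=0.5, b1=0.2, b2=0.7
series = [1.0, 2.0]
for _ in range(20):
    series.append(0.5 + 0.2 * series[-1] + 0.7 * series[-2])

coeffs = fit_ar2(series)
print([round(c, 6) for c in coeffs])  # recovers roughly [0.5, 0.2, 0.7]
print(forecast(series, coeffs))
```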


B


Backpropagation

Backpropagation is the algorithm used to train neural networks by updating their weights and biases according to the error between the estimated output and the actual output. It computes the loss (error) at the output and propagates it backward through the network, applying the chain rule to obtain the gradient (derivative) of the loss with respect to each weight and bias. These gradients are then used to update the weights so as to reduce the error.

Bagging

Bagging (bootstrap aggregating) is a technique in which multiple models are trained on different bootstrap samples of the data, and the final prediction is obtained by combining (for example, averaging or voting on) the predictions of all the models. Some algorithms that use bagging are:

- Bagging meta-estimator

- Random Forest
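A minimal sketch of bagging for regression, assuming a simple least-squares line as the base model (real bagging ensembles usually use decision trees). `fit_line` and `bagging_fit` are hypothetical helpers written for this illustration, and the data is synthetic.

```python
import random

def fit_line(points):
    """Ordinary least-squares line through (x, y) points; returns a predict function."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x

def bagging_fit(points, n_models=25, seed=0):
    """Train one model per bootstrap sample and average their predictions."""
    rng = random.Random(seed)
    models = []
    while len(models) < n_models:
        sample = rng.choices(points, k=len(points))  # sampling with replacement
        if len({x for x, _ in sample}) < 2:
            continue  # a line needs at least two distinct x values
        models.append(fit_line(sample))
    return lambda x: sum(model(x) for model in models) / len(models)

# Hypothetical noisy data scattered around y = 2x + 1
rng = random.Random(42)
data = [(x, 2 * x + 1 + rng.uniform(-1, 1)) for x in range(20)]
predict = bagging_fit(data)
print(predict(10))  # close to 21
```

Averaging over bootstrap samples mainly reduces variance, which is why bagging pays off most with unstable base models such as deep decision trees.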

Bar Chart

Bar charts are graphs used to display and compare numbers, frequencies, or other measures (e.g. the mean) across discrete categories of data. They are used for categorical variables. A simple example of a bar chart:

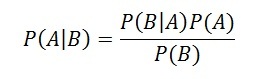

Bayes Theorem

Bayes’ theorem is used to calculate the conditional probability. Conditional probability is the probability of an event ‘B’ occurring given the related event ‘A’ has already occurred.

For example, consider the patients visiting a cancer clinic, and define two events:

A represents an event “Person has cancer”

B represents an event “Person is a smoker”

The clinic wishes to calculate the proportion of smokers from the ones diagnosed with cancer.

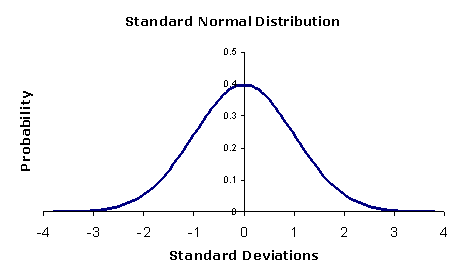

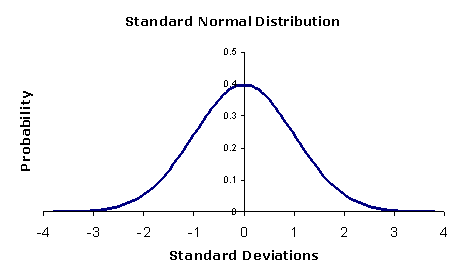
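Bayes' theorem relates the two conditional probabilities: P(A|B) = P(B|A) · P(A) / P(B). The sketch below applies it to the clinic example; all three input probabilities are made-up numbers for illustration, not real clinical data.

```python
def bayes(p_b_given_a, p_a, p_b):
    """Return P(A|B) using Bayes' theorem: P(B|A) * P(A) / P(B)."""
    if p_b == 0:
        raise ValueError("P(B) must be non-zero")
    return p_b_given_a * p_a / p_b

# Hypothetical clinic figures (illustrative only)
p_cancer = 0.10               # P(A): patient has cancer
p_smoker = 0.20               # P(B): patient is a smoker
p_smoker_given_cancer = 0.50  # P(B|A): proportion of smokers among cancer patients

p_cancer_given_smoker = bayes(p_smoker_given_cancer, p_cancer, p_smoker)
print(round(p_cancer_given_smoker, 2))  # 0.25
```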

Bayesian Statistics

Bayesian statistics is a mathematical approach that applies probability to statistical problems. It provides tools for updating beliefs in light of new data. It differs from the classical frequentist approach in that it uses Bayesian probabilities to summarize evidence.

Bias-Variance Trade-off

The expected error of a model can be broken down mathematically into components: bias, variance, and irreducible error.

Two of these components are:

- Bias error quantifies how much, on average, the predicted values differ from the actual values

- Variance quantifies how much the predictions made for the same observation differ from one another (for example, across models trained on different samples of the data)

A model with high bias underfits: it keeps missing important trends. A model with high variance overfits the training data and performs poorly on any observation beyond the training set. A good fit requires balancing bias and variance; this is the bias-variance trade-off.
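For squared-error loss the decomposition can be written out explicitly. Assuming the data follow y = f(x) + ε, where the noise ε has mean zero and variance σ², and taking expectations over training sets and noise, the expected error of a prediction f̂(x) is:

$$\mathbb{E}\big[(y - \hat{f}(x))^2\big] = \underbrace{\big(\mathbb{E}[\hat{f}(x)] - f(x)\big)^2}_{\text{bias}^2} + \underbrace{\mathbb{E}\big[(\hat{f}(x) - \mathbb{E}[\hat{f}(x)])^2\big]}_{\text{variance}} + \underbrace{\sigma^2}_{\text{irreducible error}}$$

No model can remove the σ² term. Making a model more flexible typically lowers the first term and raises the second.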


Big Data

Big data describes large volumes of data, both structured and unstructured. What matters is not the volume itself but how organizations use the data to generate insights. Companies use various tools, techniques, and resources to make sense of this data and derive effective business strategies.

Binary Variable

Binary variables are variables that can take only two unique values. For example, a variable “Smoking Habit” can take only the values “Yes” and “No”.

Binomial Distribution

The binomial distribution applies only to discrete random variables. It gives the probability of obtaining a given number of successes in an experiment with a fixed number of trials.

A binomial experiment has the following properties:

- The experiment has a fixed, finite number of trials

- Each trial has exactly two outcomes: success and failure

- The trials are independent

- The probability of success (p) is the same for every trial

For a distribution to qualify as binomial, all of these properties must be satisfied.

Which situations are binomial? Let's answer this with a few examples:

- Suppose you need to find the probability of hitting the bull's eye with a dart. Is this a binomial distribution? No, because the number of trials isn't fixed: I could hit the bull's eye on the 1st attempt, on the 3rd attempt, or not at all.

- A football match can end in three ways: win, lose, or draw. So if we are asked to find the probability of winning, the binomial distribution cannot be used, because there are more than two outcomes.

- Tossing a fair coin 20 times is a binomial experiment: there is a fixed number of trials (20), each with only two outcomes, “Head” or “Tail”. The trials are independent, and the probability of success is 1/2 in every trial.

The formula to calculate a probability using the binomial distribution is:

$$P(X = r) = {}^{n}C_{r} \, p^{r} (1-p)^{n-r}$$

where:

n : number of trials

r : number of successes

p : probability of success

1 – p : probability of failure

nCr : binomial coefficient, given by n! / (r!(n-r)!)
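The formula translates directly into Python using `math.comb` for the binomial coefficient nCr (available since Python 3.8). `binomial_pmf` is a hypothetical helper name; the coin-toss example mirrors the one above.

```python
from math import comb

def binomial_pmf(n, r, p):
    """P(X = r) for a binomial distribution with n trials and success probability p."""
    return comb(n, r) * p ** r * (1 - p) ** (n - r)

# Probability of exactly 10 heads in 20 tosses of a fair coin (about 0.176)
print(binomial_pmf(20, 10, 0.5))

# The probabilities of all possible outcomes r = 0..n sum to 1
print(sum(binomial_pmf(20, r, 0.3) for r in range(21)))
```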

Boosting

Boosting is a sequential process in which each subsequent model attempts to correct the errors of the previous models, so each model depends on the ones before it. Some boosting algorithms are:

- AdaBoost

- GBM

- XGBoost

- LightGBM

- CatBoost
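The sequential idea can be sketched with a simple gradient-boosting-style regressor: squared loss, one-split decision trees ("stumps") as base models, and each round fitting the residuals left by the previous rounds. This is a hypothetical, minimal sketch; it is not how AdaBoost or the libraries listed above are implemented, and `learning_rate=0.3` and `n_rounds=50` are arbitrary choices.

```python
def fit_stump(xs, residuals):
    """Fit a one-split regression tree (decision stump) to the residuals."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # the largest x would leave the right side empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.3):
    """Each round fits a stump to the errors that the earlier rounds left behind."""
    base = sum(ys) / len(ys)  # start from a constant prediction
    predictions = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * stump(x) for p, x in zip(predictions, xs)]
    return lambda x: base + learning_rate * sum(s(x) for s in stumps)


def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = list(range(10))
ys = [x * x for x in xs]
model = gradient_boost(xs, ys)
baseline = lambda x: sum(ys) / len(ys)
print(mse(baseline, xs, ys), mse(model, xs, ys))  # boosting lowers the training error
```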

Bootstrapping

Bootstrapping is the process of drawing multiple random samples from a dataset with replacement. Each sample has the same size as the original dataset. These samples are called bootstrap samples.

Box Plot

A box plot displays the full range of variation (from minimum to maximum), the likely range of variation (the interquartile range), and a typical value (the median). Below is a visualization of a box plot:

Some of the inferences that can be made from a box plot:


- Median: the line inside the box (the second quartile) marks the median

- The box represents the middle 50% of the data

- First quartile: 25% of the data falls below this line

- Third quartile: 75% of the data falls below this line
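The values a box plot draws can be computed with Python's standard `statistics.quantiles`. Note that tools differ in how they interpolate quartiles; this sketch uses that function's default (`'exclusive'`) method, so other software may report slightly different Q1 and Q3. `five_number_summary` is a hypothetical helper name.

```python
import statistics

def five_number_summary(data):
    """Values drawn by a box plot: min, Q1, median, Q3, max (plus the IQR)."""
    q1, median, q3 = statistics.quantiles(data, n=4)  # default 'exclusive' method
    return {
        "min": min(data),
        "q1": q1,
        "median": median,
        "q3": q3,
        "max": max(data),
        "iqr": q3 - q1,  # height of the box: the middle 50% of the data
    }

summary = five_number_summary([1, 2, 3, 4, 5, 6, 7, 8, 9])
print(summary)  # q1 = 2.5, median = 5.0, q3 = 7.5
```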

Business Analytics

Business analytics refers to the practical methodology an organization follows to explore its data and gain insights. The methodology focuses on statistical analysis of the data.

Business Intelligence

Business intelligence is the set of strategies, applications, data, and technologies an organization uses to collect and analyze data and generate insights that reveal strategic business opportunities.


C


Categorical Variable

Categorical (or nominal) variables are variables that take discrete, qualitative values. For example, city names such as Delhi, Mumbai, and Kolkata are categorical.

Classification

Classification is a supervised learning method in which the output variable is a category, such as “Male” or “Female”, or “Yes” or “No”.

Examples of classification algorithms include Logistic Regression, Decision Trees, k-NN, and SVM.

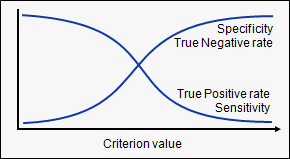

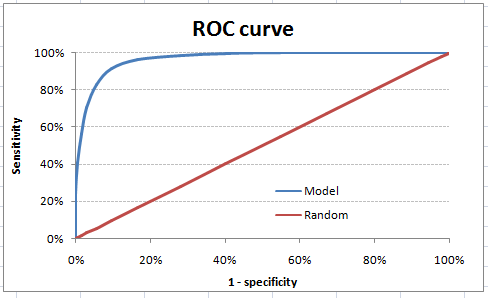

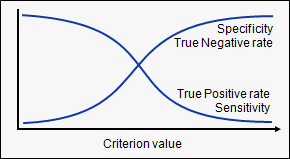

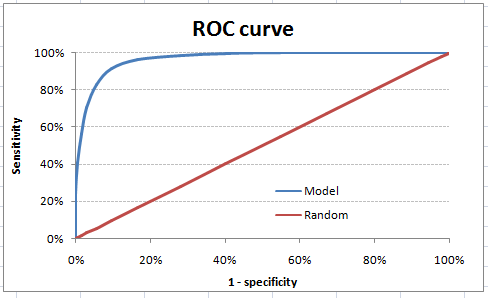

Classification Threshold

The classification threshold is the value used to classify a new observation as 1 or 0. When a model outputs probabilities, we choose a threshold and classify an observation as 1 if its probability is above the threshold, and 0 otherwise. To choose a good threshold, one can plot the ROC curve, which shows the true positive rate against the false positive rate as the threshold varies, and pick the threshold that gives the best trade-off between the two (for example, the one that maximizes the true positive rate minus the false positive rate). The AUC summarizes the whole ROC curve and does not depend on any single threshold.

Clustering

Clustering is an unsupervised learning method used to discover the inherent groupings in data. For example, customers can be grouped by their purchasing behaviour to segment them, so that a company can apply appropriate marketing tactics to each segment and generate more profit.

Examples of clustering algorithms include K-Means and hierarchical clustering.
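K-Means, one of the algorithms named above, can be sketched in a few lines with Lloyd's algorithm: alternately assign each point to its nearest centroid and move each centroid to the mean of its points. This is a minimal, hypothetical sketch (random initialization from the data, a fixed seed, Euclidean distance via `math.dist`, Python 3.8+); libraries add smarter initialization and multiple restarts. The customer points are invented for illustration.

```python
import math
import random

def kmeans(points, k, max_iterations=100, seed=0):
    """Lloyd's algorithm: alternate assignment and centroid-update steps."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    for _ in range(max_iterations):
        # Assignment step: put each point in the cluster of its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        new_centroids = [
            tuple(sum(coords) / len(cluster) for coords in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:  # converged: centroids no longer move
            break
        centroids = new_centroids
    return centroids, clusters

# Two well-separated, hypothetical customer groups (e.g. spend vs. number of visits)
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, clusters = kmeans(points, k=2)
print(sorted(centroids))
```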

Computer Vision

Computer vision is a field of computer science that enables computers to see, process, and identify images and videos in a way similar to human vision. In recent years, the major driving forces behind computer vision have been the emergence of deep learning, the rise in computational power, and the availability of huge amounts of image data. Image data can take many forms, such as video sequences, views from multiple cameras, or multi-dimensional data from a medical scanner. Some of the key applications of computer vision are:

- Detection of pedestrians, cars, and roads in smart (self-driving) cars

- Object recognition

- Object tracking

- Motion analysis

- Image restoration

Concordant-Discordant Ratio

Concordant and discordant pairs describe the relationship between pairs of observations. To calculate them, the data are treated as ordinal. The numbers of concordant and discordant pairs are used to calculate Kendall's tau, which measures the association between two ordinal variables.

Let’s say you had two movie reviewers rank a set of 5 movies:

| Movie | Reviewer 1 | Reviewer 2 |
|---|---|---|
| A | 1 | 1 |
| B | 2 | 2 |
| C | 3 | 4 |
| D | 4 | 3 |
| E | 5 | 6 |

The movies are listed in ascending order of the ranks given by Reviewer 1, which makes it easy to compare the two reviewers' rankings.

Concordant pair: two entities form a concordant pair if both reviewers rank them in the same order. For example, in the table above, B and D form a concordant pair because both reviewers ranked B higher than D.

Discordant pair: C and D form a discordant pair because the reviewers ranked them in opposite order.

Concordant (or discordant) pair ratio = (number of concordant (or discordant) pairs) / (total number of pairs tested)
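Counting the pairs for the movie table makes the definitions concrete. A pair is concordant when the two rank differences have the same sign and discordant when they have opposite signs. The sketch also computes Kendall's tau in its simplest form, tau-a = (concordant - discordant) / total pairs, which assumes there are no ties; `concordance_counts` is a hypothetical helper name.

```python
from itertools import combinations

def concordance_counts(ranks_1, ranks_2):
    """Count concordant and discordant pairs between two rankings of the same items."""
    concordant = discordant = 0
    for i, j in combinations(range(len(ranks_1)), 2):
        product = (ranks_1[i] - ranks_1[j]) * (ranks_2[i] - ranks_2[j])
        if product > 0:
            concordant += 1  # both reviewers order the pair the same way
        elif product < 0:
            discordant += 1  # the reviewers disagree on the order
    return concordant, discordant  # tied pairs (product == 0) are in neither count

reviewer_1 = [1, 2, 3, 4, 5]  # movies A-E, as in the table above
reviewer_2 = [1, 2, 4, 3, 6]
c, d = concordance_counts(reviewer_1, reviewer_2)
total = c + d
print(c, d, c / total, (c - d) / total)  # 9 1 0.9 0.8
```

Only the pair (C, D) is discordant, so the concordant ratio is 9/10 and Kendall's tau-a is 0.8.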

Confidence Interval

A confidence interval is a range of values, computed from a sample, that is likely to contain an unknown population value, such as the percentage of a population that falls in a category. For example, if 70 adults in a random sample of 100 own a cell phone, a 95% confidence interval for the true percentage in the population is roughly 61% to 79%.

Confusion Matrix

A confusion matrix is a table that is often used to describe the performance of a classification model. It is an N × N matrix, where N is the number of classes, that cross-tabulates the model's predicted classes against the actual classes. In the binary case, the 2nd quadrant holds the false negatives (Type II errors), whereas the 3rd quadrant holds the false positives (Type I errors).
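A confusion matrix is just a table of counts. The sketch below builds one from lists of actual and predicted labels. The layout (rows are actual classes, columns are predicted classes, in the order given by `labels`) is an assumption for this example; conventions differ between tools, so check which axis is which before reading off errors. The labels are hypothetical.

```python
def confusion_matrix(y_true, y_pred, labels):
    """N x N counts: rows are actual classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for actual, predicted in zip(y_true, y_pred):
        matrix[index[actual]][index[predicted]] += 1
    return matrix

y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 0]
cm = confusion_matrix(y_true, y_pred, labels=[1, 0])
print(cm)  # [[2, 1], [1, 2]]
# Row 0 (actual positive): TP = 2, FN = 1; row 1 (actual negative): FP = 1, TN = 2
```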

Continuous Variable

Continuous variables can take an infinite number of values within a range. For example, height is a continuous variable.

Convergence

Convergence refers to moving towards union or uniformity. An iterative algorithm is said to converge when, as the iterations proceed, its output gets closer and closer to a specific value.

Convex Function

A real-valued function is called convex if the line segment between any two points on its graph lies on or above the graph.

Convex functions play an important role in many areas of mathematics. They are especially important in the study of optimization problems where they are distinguished by a number of convenient properties.
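The definition can be tested numerically: f is convex when f(λx₁ + (1 - λ)x₂) ≤ λf(x₁) + (1 - λ)f(x₂) for all points and all λ in [0, 1]. The hypothetical helper below checks this inequality on a finite grid. Finding a violation proves the function is not convex, but passing the check is only evidence, not a proof. The small tolerance absorbs floating-point rounding.

```python
def violates_convexity(f, xs, lambdas=(0.25, 0.5, 0.75), tol=1e-12):
    """Return a counterexample (x1, x2, lam) to the convexity inequality, or None."""
    for x1 in xs:
        for x2 in xs:
            for lam in lambdas:
                x = lam * x1 + (1 - lam) * x2
                chord = lam * f(x1) + (1 - lam) * f(x2)  # point on the line segment
                if f(x) > chord + tol:  # graph rises above the segment
                    return (x1, x2, lam)
    return None

grid = [i / 10 for i in range(-10, 11)]  # points in [-1, 1]
print(violates_convexity(lambda x: x * x, grid))               # None: no violation found
print(violates_convexity(lambda x: x ** 3, grid) is not None)  # True: x^3 is not convex on [-1, 1]
```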


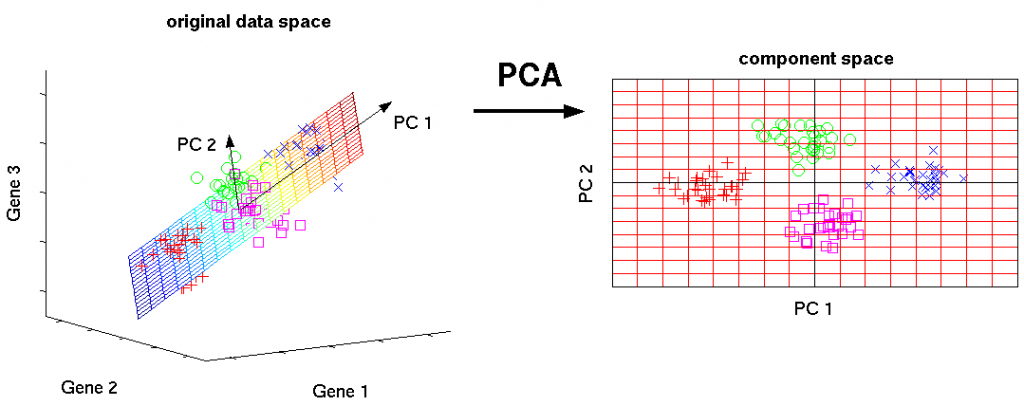

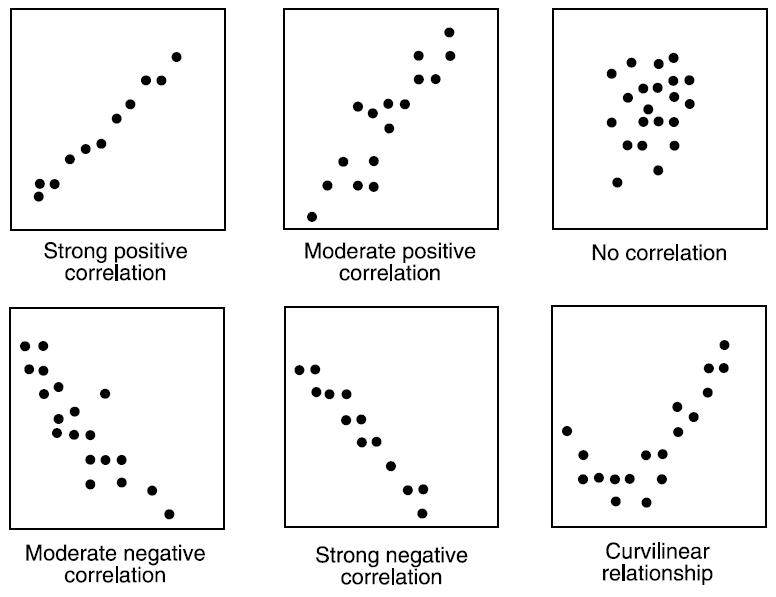

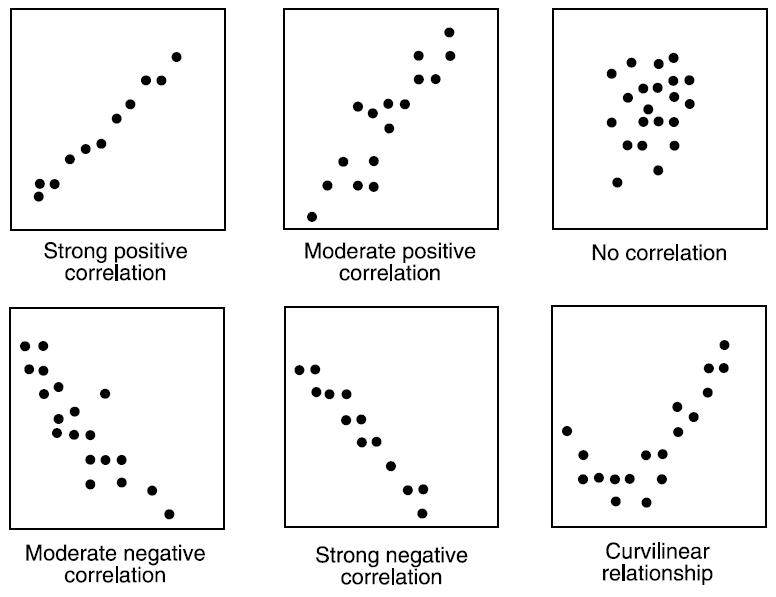

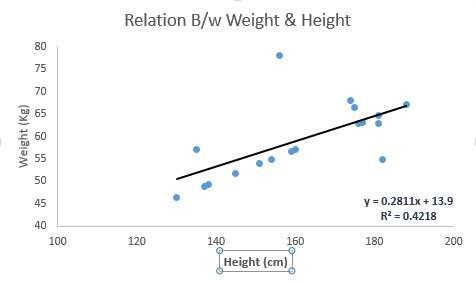

Correlation

Correlation is the ratio of the covariance of two variables to the product of their standard deviations. It takes a value between -1 and +1. A value near either extreme means the variables are strongly (positively or negatively) correlated. A value of zero indicates no linear correlation, but it does not imply that the variables are independent.

The most widely used correlation coefficient is the Pearson coefficient:

$$r = \frac{\sum_i (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_i (x_i - \bar{x})^2}\,\sqrt{\sum_i (y_i - \bar{y})^2}}$$
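A direct implementation of the Pearson coefficient also illustrates the point that zero correlation does not mean independence: below, y = x² depends completely on x, yet r is 0 because the relationship is not linear. `pearson` is a hypothetical helper name and the data is illustrative.

```python
import math

def pearson(xs, ys):
    """Pearson correlation: covariance divided by the product of standard deviations."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

xs = [-2, -1, 0, 1, 2]
print(pearson(xs, [2 * x + 1 for x in xs]))  # 1.0: perfect positive linear relationship
print(pearson(xs, [x * x for x in xs]))      # 0.0, even though y depends on x
```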

Cosine Similarity

Cosine similarity is the cosine of the angle between two non-zero vectors. Two vectors pointing in the same direction have a cosine similarity of 1, and two vectors at 90° have a cosine similarity of 0. For two vectors A and B, the cosine similarity is the dot product of A and B divided by the product of their magnitudes:

$$\cos\theta = \frac{A \cdot B}{\lVert A \rVert \, \lVert B \rVert}$$
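The formula translates directly into plain Python. `cosine_similarity` is a hypothetical helper; numerical libraries offer vectorized equivalents.

```python
import math

def cosine_similarity(a, b):
    """Dot product of a and b divided by the product of their magnitudes."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)

print(cosine_similarity([1, 0], [0, 1]))  # 0.0: vectors at 90 degrees
print(cosine_similarity([1, 2], [2, 4]))  # 1.0 up to rounding: same direction
```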

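Cost Function

A cost function defines and measures the error of a model. One common cost function, used for example in linear regression, is:

$$J = \frac{1}{2m} \sum_{i=1}^{m} \left(h(x^{(i)}) - y^{(i)}\right)^2$$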

Here,


- h(x) is the prediction

- y is the actual value

- m is the number of rows in the training set

Let us understand it with an example:

Suppose you expanded a particular shop because you predicted that its sales would increase, but after the expansion the sales barely changed. The gap between the predicted and actual sales is the error of that prediction, and the money spent on the expansion shows that such errors carry a real cost. A cost function aggregates these errors over all the training examples, and training a model means finding the parameters that minimize it.
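A minimal sketch of the squared-error cost J = 1/(2m) Σ (h(x) - y)², taking the model's predictions h(x) and the actual values y as lists. The 1/(2m) scaling is one common convention (it simplifies the gradient); other texts use 1/m. The function name and numbers are illustrative.

```python
def cost(predictions, actuals):
    """J = 1/(2m) * sum((h(x) - y)^2) over m training examples."""
    m = len(actuals)
    return sum((h - y) ** 2 for h, y in zip(predictions, actuals)) / (2 * m)

# Hypothetical predicted vs. actual sales for three shops
print(cost([3.0, 5.0, 7.0], [3.0, 4.0, 9.0]))  # (0 + 1 + 4) / 6
print(cost([3.0, 4.0, 9.0], [3.0, 4.0, 9.0]))  # 0.0: perfect predictions cost nothing
```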

Covariance

Covariance is a measure of the joint variability of two random variables. It is similar to variance, but where variance tells you how a single variable varies, covariance tells you how two variables vary together. The sample covariance is:

$$\operatorname{cov}(x, y) = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{n - 1}$$

Where,

x = the independent variable

y = the dependent variable

n = number of data points in the sample

x bar = the mean of the independent variable x

y bar = the mean of the dependent variable y

A positive covariance means the variables are positively related, while a negative covariance means the variables are inversely related.
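The sample covariance formula (dividing by n - 1) maps directly onto Python; dividing by n instead gives the population covariance. `sample_covariance` is a hypothetical helper name, and the two toy datasets show the sign behaviour described above.

```python
def sample_covariance(xs, ys):
    """Sum of (x - x_bar)(y - y_bar) divided by n - 1."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need two equal-length samples with at least 2 points")
    mx, my = sum(xs) / n, sum(ys) / n
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (n - 1)

print(sample_covariance([1, 2, 3, 4], [2, 4, 6, 8]))  # positive: y rises with x
print(sample_covariance([1, 2, 3, 4], [8, 6, 4, 2]))  # negative: y falls as x rises
```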


Cross Entropy

In information theory, the cross entropy between two probability distributions p and q over the same underlying set of events measures the average number of bits needed to identify an event drawn from the set if a coding scheme optimized for an estimated probability distribution q is used, rather than for the true distribution p. Cross entropy can be used to define the loss function in machine learning and optimization.

Cross Validation

Cross validation is a technique in which part of a dataset is held out and not used to train the model; the model is then tested on this held-out part to evaluate its performance. There are various methods of performing cross validation, such as:

- Leave one out cross validation (LOOCV)

- k-fold cross validation

- Stratified k-fold cross validation

- Adversarial validation
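k-fold cross validation, from the list above, splits the data into k folds and uses each fold once as the held-out test set while training on the rest. The hypothetical generator below yields index splits with contiguous folds and no shuffling, which keeps the example simple; in practice the data is usually shuffled first, and the stratified variant keeps class proportions equal across folds. Setting k equal to the number of samples gives leave-one-out cross validation.

```python
def k_fold_indices(n_samples, k):
    """Yield (train, test) index lists for k contiguous folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # Spread the remainder so fold sizes differ by at most one
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, test
        start += size

for train, test in k_fold_indices(10, 3):
    print(len(train), test)
```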


D


Data Mining

Data mining is the process of extracting useful information from structured or unstructured data collected from various sources. It is usually done for:

- Mining for frequent patterns

- Mining for associations

- Mining for correlations

- Mining for clusters

- Mining for predictive analysis

Data mining is used for purposes such as market analysis, identifying customer purchase patterns, financial planning, and fraud detection.

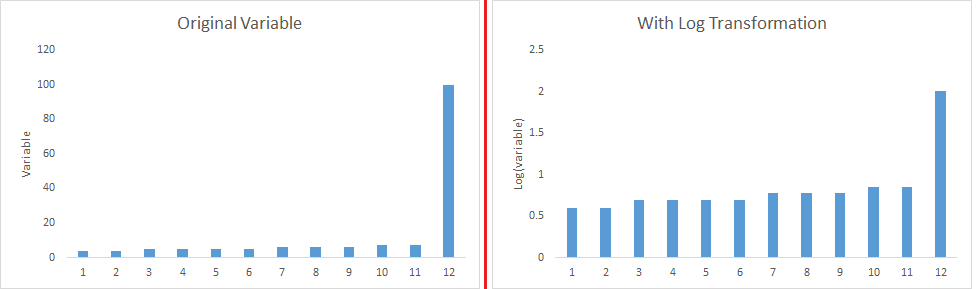

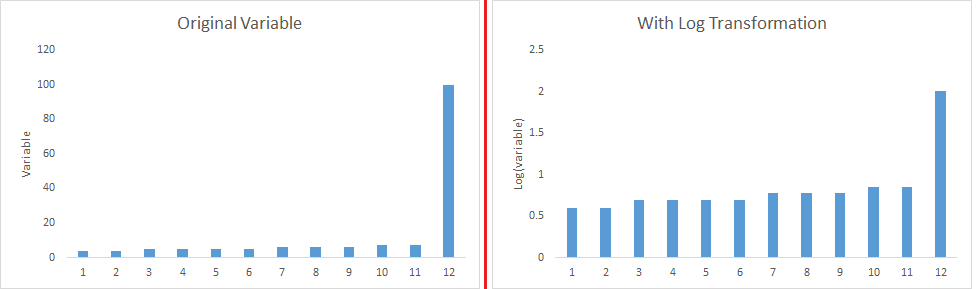

Data Science

Data science combines data analysis, algorithm development, and technology to solve analytical problems. Its main goal is to use data to generate business value.

Data Transformation

Data transformation is the process of converting data from one form to another. It is usually done as a preprocessing step.

For instance, a variable x can be replaced by its square root:

| X | SQUARE_ROOT(X) |
|---|---|
| 1 | 1 |
| 4 | 2 |
| 9 | 3 |
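A minimal Python sketch of the square-root transformation above, using only the standard library. The function name `sqrt_transform` is illustrative, not taken from any particular library.

```python
import math

def sqrt_transform(values):
    """Replace each value x by its square root (x must be non-negative)."""
    return [math.sqrt(x) for x in values]

print(sqrt_transform([1, 4, 9]))  # [1.0, 2.0, 3.0]
```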

Database A database (abbreviated as DB) is a structured collection of data. The information is organised so that it can be easily accessed by a computer. Databases are built and managed using database languages, the most common of which is SQL.

Dataframe A DataFrame is a 2-dimensional labeled data structure with columns of potentially different types. You can think of it like a spreadsheet or SQL table, or a dict of Series objects. A DataFrame accepts many different kinds of input:

- Dict of 1D ndarrays, lists, dicts, or Series

- 2-D numpy.ndarray

- Structured or record ndarray

- A Series

- Another DataFrame
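Assuming pandas and NumPy are installed, the sketch below builds DataFrames from several of the input kinds listed above. The column names and values are made up for illustration.

```python
import numpy as np
import pandas as pd

# Dict of 1-D lists: keys become column labels, and columns may have different types
df_from_dict = pd.DataFrame({"name": ["Alice", "Bob"], "age": [34, 28]})

# 2-D NumPy array: column labels are supplied explicitly
df_from_array = pd.DataFrame(np.array([[1.0, 2.0], [3.0, 4.0]]), columns=["x", "y"])

# A named Series becomes a single-column DataFrame
df_from_series = pd.DataFrame(pd.Series([10, 20, 30], name="score"))

# Another DataFrame (copy=True makes an independent copy of the data)
df_copy = pd.DataFrame(df_from_dict, copy=True)

print(df_from_dict.dtypes)
```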

Dataset A dataset (or data set) is a collection of data, organized into some type of data structure. In a database, for example, a dataset might contain a collection of business data (names, salaries, contact information, sales figures, and so forth). Several characteristics define a dataset’s structure and properties, including the number and types of its attributes or variables and the statistical measures applicable to them, such as standard deviation and kurtosis.

Dashboard A dashboard is an information management tool used to visually track, analyze and display key performance indicators, metrics and key data points. Dashboards can be customised to fulfil the requirements of a project. A dashboard can connect to files, attachments, services and APIs, and displays their data in the form of tables, line charts, bar charts and gauges. Popular tools for building dashboards include Excel and Tableau.

DBScan DBSCAN is the acronym for Density-Based Spatial Clustering of Applications with Noise. It is a clustering algorithm that forms clusters from regions of high density: for a given set of points, it groups together the points that are closely packed and treats points in low-density regions as noise.

The algorithm has two important parameters:

- the distance that defines a point's neighborhood

- the minimum number of points required to form a dense region

The steps involved in this algorithm are:

- Beginning with an arbitrary starting point, it extracts the neighborhood of this point using the distance

- If there are sufficient neighboring points around this point then a cluster is formed

- This point is then marked as visited

- A new unvisited point is retrieved and processed, leading to the discovery of a further cluster or noise

- This process continues until all points are marked as visited
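The steps above can be sketched in plain Python as follows. This is a minimal, unoptimised illustration (every neighborhood query scans all points); `eps` stands for the neighborhood distance and `min_pts` for the minimum number of points, and both names, like the sample points, are assumptions for the example.

```python
import math

def region_query(points, idx, eps):
    """Indices of all points within distance eps of points[idx], including itself."""
    return [j for j, q in enumerate(points) if math.dist(points[idx], q) <= eps]

def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    labels = [None] * len(points)  # None means "not yet visited"
    cluster_id = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        cluster_id += 1  # i is a core point: start a new cluster
        labels[i] = cluster_id
        seeds = list(neighbors)
        k = 0
        while k < len(seeds):
            j = seeds[k]
            k += 1
            if labels[j] == -1:
                labels[j] = cluster_id  # noise reachable from a core point becomes a border point
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                seeds.extend(j_neighbors)  # j is also a core point: keep expanding
    return labels

points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10), (50, 50)]
print(dbscan(points, eps=1.5, min_pts=3))
```

Points far from any dense region, such as (50, 50) here, end up labelled -1 (noise).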

The image below shows an example of DBSCAN on a set of normalized data points:

Decision Boundary In a statistical-classification problem with two or more classes, a decision boundary or decision surface is a hypersurface that partitions the underlying vector space into two or more sets, one for each class. How well the classifier works depends upon how closely the input patterns to be classified resemble the decision boundary. In the example sketched below, the correspondence is very close, and one can anticipate excellent performance.

Here the lines separating each class are decision boundaries.
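For a linear classifier, the decision boundary is the set of points where w · x + b = 0. The short Python sketch below assumes a hypothetical two-class problem whose boundary is the line x1 + x2 = 1; the weights and bias are made up for illustration.

```python
def classify(point, weights, bias):
    """Class 1 on the positive side of the boundary w . x + b = 0, otherwise class 0."""
    score = sum(w * x for w, x in zip(weights, point)) + bias
    return 1 if score > 0 else 0

# Hypothetical boundary x1 + x2 = 1, i.e. weights (1, 1) and bias -1
weights, bias = (1.0, 1.0), -1.0
print(classify((0.0, 0.0), weights, bias), classify((2.0, 2.0), weights, bias))
```

Every point on one side of the line gets the same label, which is exactly what the boundary partitions.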


Decision Tree

A decision tree is a type of supervised learning algorithm (one with a pre-defined target variable) that is mostly used in classification problems. It works for both categorical and continuous input and output variables. In this technique, we split the population (or sample) into two or more homogeneous sets (or sub-populations) based on the most significant splitter/differentiator among the input variables.
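The text does not say how the "most significant splitter" is chosen. One common criterion, assumed here, is Gini impurity. The sketch below searches a single numeric feature for the threshold that gives the most homogeneous pair of child sets; `gini` and `best_split` are illustrative names and the data are hypothetical.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a list of class labels (0.0 means perfectly homogeneous)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())

def best_split(xs, ys):
    """Return (threshold, weighted Gini) for the best split x <= threshold on one feature."""
    best = (None, float("inf"))
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        if not left or not right:
            continue  # a split must produce two non-empty sets
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if score < best[1]:
            best = (t, score)
    return best

ages = [22, 25, 30, 35, 40, 45]
smokes = ["no", "no", "no", "yes", "yes", "yes"]
print(best_split(ages, smokes))
```

A full tree applies this search recursively to each child set, over every input variable.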

Deep Learning Deep learning is associated with a class of machine learning algorithms, artificial neural networks (ANNs), which are loosely inspired by the human brain and can model arbitrary functions. ANNs require a vast amount of data, and they are highly flexible when it comes to modeling multiple outputs simultaneously.

Descriptive Statistics Descriptive statistics comprises the values that describe the spread and central tendency of data. For example, the mean represents the central tendency of the data, whereas the IQR (interquartile range) represents its spread.

Dependent Variable A dependent variable is what you measure, and it is affected by the independent (input) variable(s). It is called dependent because it “depends” on the independent variables. For example, suppose we want to predict people’s smoking habits. Whether a person smokes (“yes” or “no”) is then the dependent variable.

Decile Deciles divide a series into 10 equal parts. For any series there are nine deciles, denoted by D1, D2, …, D9, known as the first decile, second decile and so on.

For example, the diagram below shows the health scores of patients, ranging from 0 to 60. Nine deciles split the patients into 10 groups.
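Python's standard `statistics` module can compute the mean, the IQR and the nine decile cut points described above. The data are hypothetical, and `statistics.quantiles` defaults to its "exclusive" method, so other quantile conventions may give slightly different cut points.

```python
import statistics

scores = list(range(1, 10))  # hypothetical data: 1, 2, ..., 9

mean = statistics.mean(scores)                 # central tendency
q1, _, q3 = statistics.quantiles(scores, n=4)  # the three quartile cut points
iqr = q3 - q1                                  # spread: interquartile range
deciles = statistics.quantiles(scores, n=10)   # nine cut points -> ten groups

print(mean, iqr, len(deciles))  # 5 5.0 9
```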

Degree of Freedom The degrees of freedom are the number of values in a calculation that are free to vary.

For example, in a sample of size 10 with mean 10, 9 values can be chosen arbitrarily, but the 10th value is then forced by the sample mean: it must be such that the mean is 10. So the number of degrees of freedom in this case is 9.
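The arithmetic in this example can be checked with a few lines of Python: once 9 values are chosen freely (the values below are hypothetical), the required mean of 10 leaves exactly one possible 10th value.

```python
def forced_last_value(free_values, target_mean, n):
    """Return the n-th value that makes the mean of all n values equal target_mean."""
    return target_mean * n - sum(free_values)

free = [4, 12, 8, 9, 15, 7, 11, 10, 13]  # 9 freely chosen values (hypothetical)
last = forced_last_value(free, target_mean=10, n=10)
print(last)  # 11
```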

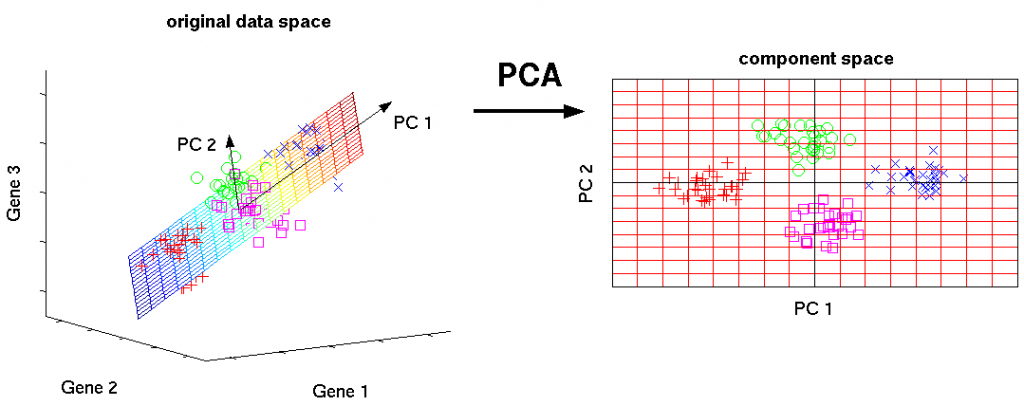

Dimensionality Reduction Dimensionality reduction is the process of reducing the number of random variables under consideration by obtaining a set of principal variables. In other words, it converts data with a large number of dimensions into data with fewer dimensions while ensuring that it conveys similar information concisely. Some of the benefits of dimensionality reduction are:

- It compresses the data and reduces the storage space required

- It reduces the time required to perform the same computations

- It takes care of multicollinearity, which improves model performance, and removes redundant features

- Reducing the dimensions of data to 2D or 3D may allow us to plot and visualize it

- It also helps remove noise, which can improve model performance

Dplyr Dplyr is a popular data manipulation package in R. It makes data manipulation, cleaning and summarizing very user-friendly. Dplyr can work not only with local datasets but also with remote database tables, using exactly the same R code.

It can be easily installed using the following code from the R console:

install.packages("dplyr")

Dummy Variable A dummy variable is another name for a Boolean (binary) variable: it takes the value 0 or 1. For example, a dummy variable for age could take the value 0 when age < 25 and 1 when age >= 25.
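A minimal Python sketch of the age example: each age is turned into a 0/1 dummy using the cutoff of 25. The function name `age_dummy` and the sample ages are illustrative.

```python
def age_dummy(ages, cutoff=25):
    """0 when age < cutoff, 1 when age >= cutoff (the coding used in the example)."""
    return [1 if age >= cutoff else 0 for age in ages]

print(age_dummy([18, 25, 40, 24]))  # [0, 1, 1, 0]
```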


E

Word

Description

Early Stopping Early stopping is a technique for avoiding overfitting when training a machine learning model with an iterative method. Training is stopped once performance on a held-out validation set has stopped improving.

For example, in XGBoost, as you train more and more trees, you will eventually overfit the training dataset. Early stopping lets you specify a validation dataset and the number of iterations after which training should stop if the score on the validation dataset has not improved.
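The stopping rule can be sketched independently of any library. The loop below walks through a hypothetical list of per-round validation losses and stops after `patience` consecutive rounds without improvement; XGBoost exposes a similar setting as `early_stopping_rounds`, but this function is an illustration, not its implementation.

```python
def early_stopping(val_losses, patience):
    """Return (best_round, stopped_round) for a list of per-round validation losses."""
    best_loss = float("inf")
    best_round = 0
    rounds_without_improvement = 0
    for rnd, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_round = loss, rnd  # new best: reset the counter
            rounds_without_improvement = 0
        else:
            rounds_without_improvement += 1
            if rounds_without_improvement >= patience:
                return best_round, rnd  # stop training here
    return best_round, len(val_losses) - 1  # never triggered: ran all rounds

losses = [0.9, 0.7, 0.6, 0.55, 0.56, 0.58, 0.6, 0.65]  # hypothetical validation losses
print(early_stopping(losses, patience=3))  # (3, 6)
```

The model kept is the one from `best_round`, not the one from the round where training stopped.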

EDA EDA, or exploratory data analysis, is a phase of the data science pipeline in which the focus is on understanding the data through visualization or statistical analysis.

The steps involved in EDA are:

- Variable identification: in this step, we identify the data type and category of each variable

- Univariate analysis

- Multivariate analysis

ETL ETL is the acronym for Extract, Transform and Load. An ETL system has the following properties:

- It extracts data from the source systems

- It enforces data quality and consistency standards

- It delivers data in a presentation-ready format

This data can then be used by application developers to build applications and by end users to make decisions.
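A toy extract-transform-load pipeline in Python, using only the standard library. The CSV text, the `sales` table and the function names are all hypothetical; the in-memory SQLite database stands in for a real target system.

```python
import csv
import io
import sqlite3

RAW_CSV = """name,amount
alice, 10.5
bob,not_a_number
 carol ,7
"""

def extract(text):
    """Extract: read rows from the source (an in-memory CSV string here)."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: enforce quality rules (numeric amount, tidy names) and drop bad rows."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # reject rows that fail the quality check
        clean.append((row["name"].strip().title(), amount))
    return clean

def load(rows, conn):
    """Load: write the cleaned rows into a presentation-ready table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (name TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT name, amount FROM sales").fetchall())
```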

Evaluation Metrics The purpose of an evaluation metric is to measure the quality of a statistical or machine learning model. A few examples of evaluation metrics are:

- AUC

- ROC score

- F-Score

- Log-Loss
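As one example from this list, binary log-loss averages −[y·log(p) + (1 − y)·log(1 − p)] over all samples, where y is the true label (0 or 1) and p is the predicted probability of class 1. A minimal Python sketch; the clipping constant `eps` is an assumption added to avoid log(0).

```python
import math

def log_loss(y_true, y_prob, eps=1e-15):
    """Average binary cross-entropy; lower is better."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # clip probabilities away from 0 and 1
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(y_true)

print(log_loss([1, 0], [0.9, 0.1]))  # confident and correct: small loss
print(log_loss([1, 0], [0.5, 0.5]))  # uninformative guess: log(2)
```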


F

Word

Description

Factor Analysis Factor analysis is a technique used to reduce a large number of variables to a smaller number of factors. It aims to find independent latent variables. Factor analysis makes several assumptions:

- There is a linear relationship

- There is no multicollinearity

- Only relevant variables are included in the analysis

- There is true correlation between variables and factors

Several methods can be used to extract factors from a dataset:

- Principal Component Analysis

- Common factor analysis

- Image factoring

- Maximum likelihood method

False Negative Points that are actually true (positive) but are incorrectly predicted as false (negative). For example, if the problem is to predict loan status (Y = loan approved, N = loan not approved), the false negatives are the samples for which the loan was approved but the model predicted it as not approved.

False Positive Points that are actually false (negative) but are incorrectly predicted as true (positive). In the same loan-status problem, the false positives are the samples for which the loan was not approved but the model predicted it as approved.

Feature Hashing Feature hashing is a method for transforming features into a vector. Instead of looking up indices in an associative array, it applies a hash function to the features and uses their hash values as indices directly. A simple example of feature hashing:

Suppose we have three documents:

- John likes to watch movies.

- Mary likes movies too.

- John also likes football.

Now we can convert these documents to vectors using hashing.

| Term | Index |
|---|---|
| John | 1 |
| likes | 2 |
| to | 3 |
| watch | 4 |
| movies | 5 |
| Mary | 6 |
| too | 7 |
| also | 8 |
| football | 9 |

The array form for the same will be:
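A minimal sketch of the hashing trick for the three documents above. It assumes a vector length of 16 and uses `zlib.crc32` as the hash (Python's built-in `hash()` for strings changes between runs), so the slot numbers differ from the term-index table; tokens that collide simply share a slot.

```python
import re
import zlib

def hash_vector(doc, n_features=16):
    """Map a document to a fixed-length count vector without storing a vocabulary."""
    vec = [0] * n_features
    for token in re.findall(r"[A-Za-z]+", doc):
        # crc32 is deterministic across runs, unlike hash() on str
        index = zlib.crc32(token.encode("utf-8")) % n_features
        vec[index] += 1  # colliding tokens add to the same slot
    return vec

docs = ["John likes to watch movies.", "Mary likes movies too.", "John also likes football."]
vectors = [hash_vector(d) for d in docs]
for v in vectors:
    print(v)
```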

Feature Reduction Feature reduction is the process of reducing the number of features to work on in a computation-intensive task, without losing much information.

PCA is one of the most popular feature reduction techniques; it combines correlated variables into a smaller number of new features.
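A minimal PCA sketch with NumPy, assuming PCA is computed via the singular value decomposition of the mean-centred data. The data are synthetic: the third column is an exact combination of the first two, so two components keep all of the variance.

```python
import numpy as np

def pca_reduce(X, k):
    """Project the rows of X onto their top-k principal components."""
    X_centered = X - X.mean(axis=0)  # PCA works on mean-centred columns
    # Rows of Vt are the principal directions, sorted by decreasing singular value
    _, _, Vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ Vt[:k].T

rng = np.random.default_rng(0)
a = rng.normal(size=100)
b = rng.normal(size=100)
X = np.column_stack([a, b, a + b])  # third column is perfectly correlated with the others
reduced = pca_reduce(X, k=2)
print(X.shape, "->", reduced.shape)
```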

Feature Selection Feature selection is the process of choosing the features that are required to explain the predictive power of a statistical model and dropping irrelevant ones.

This can be done either by filtering out less useful features or by combining features to make new ones.
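One simple filter method, assumed here for illustration, keeps only the features whose absolute Pearson correlation with the target exceeds a threshold. The feature names, values and threshold below are hypothetical.

```python
import math

def pearson(x, y):
    """Pearson correlation; returns 0.0 when either input is constant."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx and sy else 0.0

def filter_select(features, target, threshold):
    """Keep the names of features with |correlation to target| >= threshold."""
    return [name for name, values in features.items()
            if abs(pearson(values, target)) >= threshold]

target = [1, 2, 3, 4, 5]
features = {
    "useful": [2, 4, 6, 8, 10],   # perfectly correlated with the target
    "noise": [3, 1, 4, 1, 5],     # weakly correlated
    "constant": [7, 7, 7, 7, 7],  # carries no information
}
print(filter_select(features, target, threshold=0.5))  # ['useful']
```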

Few-shot Learning Few-shot learning refers to training machine learning algorithms using a very small set of training data instead of a very large one. It is particularly useful in computer vision, where it is desirable to have an object categorization model work well without thousands of training examples.

Flume Flume is a service designed for streaming logs into the Hadoop environment. It can collect and aggregate huge amounts of log data from a variety of sources. To collect high volumes of data, multiple Flume agents can be configured.

Here are the major features of Apache Flume:

- Flume is flexible: it can scale from environments with as few as five machines to environments with several thousand machines

- Apache Flume provides high throughput and low latency

- Apache Flume has a declarative configuration and is easy to extend

- Flume in Hadoop is fault tolerant, linearly scalable and stream oriented

Frequentist Statistics

Frequentist statistics tests whether an event (hypothesis) occurs or not. It calculates the probability of an event in the long run of the experiment (i.e. the experiment is repeated under the same conditions to obtain the outcome).

Here, samples of a fixed size are taken. The experiment is theoretically repeated an infinite number of times, but in practice it is run with a stopping intention. For example, in a coin-toss experiment I might decide in advance to stop once the coin has been tossed 1000 times or once I have seen at least 300 heads.
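The coin-toss experiment with its stopping intention can be simulated as below. A fair coin and a fixed seed are assumptions for reproducibility; the observed frequency of heads estimates the long-run probability.

```python
import random

def coin_experiment(max_tosses=1000, target_heads=300, seed=0):
    """Toss a fair coin until either stopping condition is met; return (tosses, heads)."""
    rng = random.Random(seed)
    tosses = heads = 0
    while tosses < max_tosses and heads < target_heads:
        tosses += 1
        heads += rng.random() < 0.5  # True counts as 1
    return tosses, heads

tosses, heads = coin_experiment()
print(tosses, heads, heads / tosses)
```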

F-Score The F-score evaluation metric combines precision and recall into a single measure of classification effectiveness. The β coefficient sets the relative weight of recall versus precision: recall is treated as β times as important as precision, and β = 1 gives equal weight (the F1 score).

F measure = (1 + β²) × (Precision × Recall) / (β² × Precision + Recall)
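A direct Python translation of the F measure formula. Returning 0.0 when precision and recall are both zero is an assumed convention to avoid dividing by zero.

```python
def f_beta(precision, recall, beta=1.0):
    """F-beta score: recall is weighted beta times as much as precision."""
    if precision == 0 and recall == 0:
        return 0.0  # assumed convention for the undefined case
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)

print(f_beta(1.0, 0.5))          # F1
print(f_beta(1.0, 0.5, beta=2))  # F2: lower, because the weak recall counts more
```

With β = 1 the formula reduces to the familiar 2 × Precision × Recall / (Precision + Recall).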


G

Word

Description

Gated Recurrent Unit (GRU) The GRU is a variant of the LSTM (Long Short Term Memory) and was introduced by K. Cho. It retains the LSTM’s resistance to the vanishing gradient problem, but because of its simpler internal structure it is faster to train.

Instead of the input, forget, and output gates in the LSTM cell, the GRU cell has only two gates, an update gate z, and a reset gate r. The update gate defines how much previous memory to keep, and the reset gate defines how to combine the new input with the previous memory.
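A single GRU time step can be sketched in NumPy as below. It follows the convention of Cho et al., in which the update gate z weights the previous state; some libraries swap the roles of z and 1 − z. The weight names and sizes are assumptions, and the random weights stand in for trained ones.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x, h, params):
    """One GRU step: returns the new hidden state for input x and previous state h."""
    W_z, U_z, b_z, W_r, U_r, b_r, W_h, U_h, b_h = params
    z = sigmoid(W_z @ x + U_z @ h + b_z)              # update gate: how much old memory to keep
    r = sigmoid(W_r @ x + U_r @ h + b_r)              # reset gate: how much old memory feeds the candidate
    h_tilde = np.tanh(W_h @ x + U_h @ (r * h) + b_h)  # candidate from new input and reset memory
    return z * h + (1.0 - z) * h_tilde                # blend previous state and candidate

rng = np.random.default_rng(0)
n_in, n_hidden = 3, 4
params = []
for _ in range(3):  # one (W, U, b) triple each for z, r and the candidate
    params += [rng.normal(size=(n_hidden, n_in)),
               rng.normal(size=(n_hidden, n_hidden)),
               np.zeros(n_hidden)]

h = np.zeros(n_hidden)
for x in rng.normal(size=(5, n_in)):  # a hypothetical sequence of 5 input vectors
    h = gru_step(x, h, params)
print(h)
```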

Ggplot2 ggplot2 is a data visualization package for the R programming language. It is a highly versatile and user-friendly tool for creating attractive plots.

It can be easily installed using the following code from the R console:

install.packages("ggplot2")

Go Go is an open source programming language that makes it easy to build simple, reliable, and efficient software. Go is a statically typed language in the tradition of C.

The main features of Go are:

- Memory safety

- Garbage collection

- Structural typing

The compiler and other tools originally developed by Google are all free and open source.

Goodness of Fit The goodness of fit of a model describes how well it fits a set of observations. Measures of goodness of fit typically summarize the discrepancy between observed values and the values expected under the model.

With regard to a machine learning algorithm, a good fit is when the error of the model on both the training data and the test data is minimal. Over time, as the algorithm learns, the error on the training data goes down, and so does the error on the test dataset. If we train for too long, the error on the training dataset may continue to decrease because the model is overfitting and learning irrelevant detail and noise in the training dataset. At the same time, the error on the test set starts to rise again as the model’s ability to generalize decreases.

The point just before the error on the test dataset starts to increase, where the model has good skill on both the training dataset and the unseen test dataset, is known as the good fit of the model.
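One classic measure of the discrepancy between observed and expected values is Pearson's chi-square statistic, the sum of (O − E)² / E. The sketch below assumes a hypothetical die rolled 60 times, where a fair die would be expected to show each face 10 times.

```python
def chi_square_statistic(observed, expected):
    """Sum of (O - E)^2 / E: 0 for a perfect fit, larger for a worse fit."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))

observed = [8, 12, 9, 11, 10, 10]  # hypothetical counts for faces 1-6
expected = [10] * 6                # what a fair die would give on average
print(chi_square_statistic(observed, expected))
```

Judging whether a given value is "large" requires comparing it with a chi-square distribution with the appropriate degrees of freedom (here 5).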

Gradient Descent Gradient descent is a first-order iterative optimization algorithm for finding the minimum of a function. In machine learning, we use gradient descent to minimize the cost function, which finds the best set of parameters for our algorithm. Gradient descent can be classified as follows:

- On the basis of data ingestion:

  - Full Batch Gradient Descent Algorithm

  - Stochastic Gradient Descent Algorithm

  In full batch gradient descent, we use the whole dataset at once to compute the gradient, whereas in stochastic gradient descent we use a sample of the data to compute each gradient.

- On the basis of differentiation techniques:

  - First order Differentiation

  - Second order Differentiation
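The difference between full batch and stochastic gradient descent can be seen on a tiny linear regression. The sketch assumes a mean-squared-error cost, noiseless hypothetical data from y = 2x + 1, and hand-picked learning rates; both variants should recover w ≈ 2 and b ≈ 1.

```python
import random

def batch_gd(xs, ys, lr=0.05, epochs=2000):
    """Full batch: every update uses the gradient over the whole dataset."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def stochastic_gd(xs, ys, lr=0.01, epochs=500, seed=0):
    """Stochastic: every update uses the gradient of a single sample."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(zip(xs, ys))
    for _ in range(epochs):
        rng.shuffle(data)  # visit samples in a new random order each epoch
        for x, y in data:
            err = w * x + b - y
            w -= lr * 2 * err * x
            b -= lr * 2 * err
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]
w_batch, b_batch = batch_gd(xs, ys)
w_sgd, b_sgd = stochastic_gd(xs, ys)
print(round(w_batch, 4), round(b_batch, 4), round(w_sgd, 4), round(b_sgd, 4))
```

Each stochastic update is cheaper but noisier; on large datasets that trade-off is usually worthwhile.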


H

Word

Description

Hadoop Hadoop is an open source distributed processing framework used to deal with enormous amounts of data. It uses parallel processing to handle big data. Here are some significant benefits of Hadoop:

- Hadoop clusters keep multiple copies of the data to ensure its reliability. A maximum of 4500 machines can be connected together using Hadoop

- The whole process is broken down into pieces and executed in parallel, saving time. A maximum of 25 petabytes (1 PB = 1000 TB) of data can be processed using Hadoop

- For a long query, Hadoop builds backup datasets at every level. It also executes the query on duplicate datasets to avoid losing work if an individual node fails. These steps make Hadoop processing more precise and accurate

- Queries in Hadoop are as simple as coding in any language. You just need to change the way of thinking around building a query to enable parallel processing
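Hadoop's classic programming model is MapReduce: work is split into chunks that are mapped independently, the intermediate results are grouped by key, and each group is reduced. The plain-Python word count below only imitates those three phases sequentially on hypothetical text; it does not use Hadoop's actual API.

```python
from collections import defaultdict

def map_phase(chunk):
    """Map: emit (word, 1) for every word in one chunk of input."""
    return [(word.lower(), 1) for word in chunk.split()]

def shuffle_phase(mapped_outputs):
    """Shuffle: group every emitted value by its key, across all mappers."""
    groups = defaultdict(list)
    for pairs in mapped_outputs:
        for key, value in pairs:
            groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Reduce: combine the values for each key (here, sum the counts)."""
    return {key: sum(values) for key, values in groups.items()}

# Each chunk could be processed on a different machine in a real cluster
chunks = ["big data needs big clusters", "data is split into chunks", "big chunks"]
counts = reduce_phase(shuffle_phase(map(map_phase, chunks)))
print(counts)
```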

Hidden Markov Model Hidden Markov Process is a Markov process in which the states are invisible or hidden, and the model developed to estimate these hidden states is known as the Hidden Markov Model (HMM). However, the output (data) dependent on the hidden states is visible. This output data generated by HMM gives some cue about the sequence of states.

HMMs are widely used for pattern recognition in speech recognition, part-of-speech tagging, handwriting recognition, and reinforcement learning.
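Because the states are hidden, the probability of an observed sequence has to be summed over every possible state path. The forward algorithm, sketched below, does this efficiently. The weather states, activities and probabilities are a hypothetical toy model.

```python
states = ("Rainy", "Sunny")
start_p = {"Rainy": 0.6, "Sunny": 0.4}
trans_p = {
    "Rainy": {"Rainy": 0.7, "Sunny": 0.3},
    "Sunny": {"Rainy": 0.4, "Sunny": 0.6},
}
emit_p = {
    "Rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "Sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}

def forward(observations, states, start_p, trans_p, emit_p):
    """Probability of an observation sequence, summed over all hidden state paths."""
    # alpha[s] = P(observations so far, current hidden state = s)
    alpha = {s: start_p[s] * emit_p[s][observations[0]] for s in states}
    for obs in observations[1:]:
        alpha = {
            s: sum(alpha[prev] * trans_p[prev][s] for prev in states) * emit_p[s][obs]
            for s in states
        }
    return sum(alpha.values())

print(forward(["walk", "shop", "clean"], states, start_p, trans_p, emit_p))
```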

Hierarchical Clustering

Hierarchical clustering, as the name suggests, is an algorithm that builds a hierarchy of clusters. It starts with each data point assigned to a cluster of its own. Then the two nearest clusters are merged into one cluster, and this merging step is repeated. The algorithm terminates when only a single cluster is left.
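A minimal agglomerative sketch of the merging loop. The text does not say how the distance between two clusters is measured; this example assumes single linkage (the closest pair of points) and uses hypothetical 1-D points. The recorded merges and their distances are what a dendrogram draws.

```python
def agglomerative(points, dist):
    """Repeatedly merge the two nearest clusters; return the list of merges."""
    clusters = [[p] for p in points]  # every point starts in its own cluster
    merges = []
    while len(clusters) > 1:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                # single linkage: distance between the closest members
                d = min(dist(a, b) for a in clusters[i] for b in clusters[j])
                if best is None or d < best[0]:
                    best = (d, i, j)
        d, i, j = best
        merges.append((clusters[i], clusters[j], d))
        merged = clusters[i] + clusters[j]
        clusters = [c for k, c in enumerate(clusters) if k not in (i, j)] + [merged]
    return merges

merges = agglomerative([1, 2, 10, 11, 20], dist=lambda a, b: abs(a - b))
for left, right, d in merges:
    print(left, "+", right, "at distance", d)
```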

The results of hierarchical clustering can be shown using a dendrogram. The dendrogram can be interpreted as:

Histogram A histogram is one of the methods for visualizing the distribution of a continuous variable. For example, the figure below shows a histogram with age along the x-axis and frequency (count of passengers) along the y-axis.

Histograms are widely used to determine the skewness of the data. By looking at the tails of the plot, you can tell whether the data distribution is left-skewed, symmetric (as in a normal distribution) or right-skewed.
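Under the hood, a histogram is just a count of values per bin. The sketch below assumes integer ages, a bin width of 10 and hypothetical data, and prints a rough text histogram.

```python
from collections import Counter

def histogram_counts(values, bin_width):
    """Count the values falling in each bin [start, start + bin_width)."""
    return dict(sorted(Counter((v // bin_width) * bin_width for v in values).items()))

ages = [22, 25, 31, 35, 38, 41, 45, 52, 67]  # hypothetical passenger ages
for start, count in histogram_counts(ages, 10).items():
    print(f"{start:>3}-{start + 9:<3} {'#' * count}")  # label assumes integer ages
```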


Hive Hive is a data warehouse software project to process structured data in Hadoop. It is built on top of Apache Hadoop for providing data summarization, query and analysis. Hive gives an SQL-like interface to query data stored in various databases and file systems that integrate with Hadoop. Some of the key features of Hive are :

- Indexing to provide acceleration (removed in Hive 3.0)

- Different storage types such as plain text, RCFile, HBase, ORC, and others

- Metadata storage in a relational database management system, significantly reducing the time to perform semantic checks during query execution

- Operating on compressed data stored in the Hadoop ecosystem

Holdout Sample When working with a dataset, a small part of it is often kept out of training and used instead to check the model's performance. This part of the dataset is called the holdout sample.

For instance, if I split my data into two parts in a 70:30 ratio, using 70% to train the model and the other 30% to check its performance, the 30% is called the holdout sample.
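The 70:30 split above can be sketched in a few lines of Python. This assumes the records can be shuffled randomly before splitting (for time series you would instead hold out the most recent observations), and the fixed seed is an arbitrary choice made only so the split is reproducible.

```python
import random


def holdout_split(rows, holdout_fraction=0.3, seed=0):
    """Shuffle rows and split them into (training set, holdout sample)."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed so the split is reproducible
    n_holdout = round(len(shuffled) * holdout_fraction)
    return shuffled[n_holdout:], shuffled[:n_holdout]


data = list(range(100))  # stand-in for 100 records
train, holdout = holdout_split(data)
print(len(train), len(holdout))
```

The model is fitted on `train` only; `holdout` is touched just once, to estimate how well the model generalizes.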

Holt-Winters Forecasting Holt-Winters is one of the most popular forecasting techniques for time series. The model predicts future values by combining the effects of trend and seasonality. The idea behind Holt-Winters forecasting is to apply exponential smoothing to the seasonal component in addition to the level and the trend.

Hyperparameter A hyperparameter is a parameter whose value is set before training a machine learning or deep learning model. Different models require different hyperparameters, and some require none. Hyperparameters should not be confused with the parameters of the model, which are estimated or learned from the data.

Some key points about hyperparameters are:

- They are often used in processes to help estimate model parameters.

- They are often manually set.

- They are often tuned to tweak a model’s performance.

The number of trees in a Random Forest, eta (the learning rate) in XGBoost, and k in k-nearest neighbours are some examples of hyperparameters.
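The distinction between hyperparameters and parameters shows up even in a tiny model. In this hypothetical Python sketch, a straight line is fitted by gradient descent: `learning_rate` and `epochs` are hyperparameters fixed before training, while the slope `w` and intercept `b` are parameters learned from the data. The toy data and the chosen values are illustrative assumptions.

```python
def fit_line(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by gradient descent on mean squared error.

    learning_rate and epochs are hyperparameters: chosen before training.
    w and b are parameters: learned from the data.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0, 1, 2, 3, 4]
ys = [2 * x + 1 for x in xs]  # toy data generated from w = 2, b = 1
print(fit_line(xs, ys))                                 # close to (2, 1)
print(fit_line(xs, ys, learning_rate=0.2, epochs=50))   # learning rate too large: diverges
```

The second call uses the same data and model but a poorly chosen hyperparameter, which is why hyperparameters are usually tuned rather than left at arbitrary values.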

Hyperplane A hyperplane is a subspace whose dimension is one less than that of its surrounding (ambient) space. In a 3-dimensional space, a hyperplane is just an ordinary 2D plane; in a 5-dimensional space, it is 4-dimensional, and so on.

In the familiar 3D case a hyperplane is simply an ordinary plane, but in some settings, such as Support Vector Machines, where classification is performed with a hyperplane in an n-dimensional feature space, n can be quite large.
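In n-dimensional space a hyperplane can be written as the set of points x satisfying w · x + b = 0, where w is a normal vector and b an offset (standard notation, not taken from this glossary). A linear classifier such as an SVM labels a point by which side of this hyperplane it falls on. A minimal Python sketch, with a hypothetical plane chosen for illustration:

```python
def side_of_hyperplane(w, b, x):
    """Return +1, -1 or 0 depending on where x lies relative to w · x + b = 0."""
    if len(w) != len(x):
        raise ValueError("w and x must have the same dimension")
    value = sum(wi * xi for wi, xi in zip(w, x)) + b
    return (value > 0) - (value < 0)  # sign of the value as an int


# The plane x + y + z = 3 is a hyperplane in 3-dimensional space
w, b = (1.0, 1.0, 1.0), -3.0
print(side_of_hyperplane(w, b, (2.0, 2.0, 2.0)))  # above the plane
print(side_of_hyperplane(w, b, (0.0, 0.0, 0.0)))  # below the plane
```

The same function works unchanged for any n; only the length of `w` and `x` grows.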

Hypothesis Simply put, a hypothesis is a possible view or assertion of an analyst about the problem they are working on. It may or may not turn out to be true.

I

Word

Description

Imputation Imputation is a technique for handling missing values in the data. It is done either with statistical measures, as in mean or mode imputation, or with machine learning techniques, as in kNN imputation.

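For example, suppose the data is as follows:

| Name | Age |
|---|---|
| Akshay | 23 |
| Akshat | NA |
| Viraj | 40 |

The second row contains a missing value, so to impute it we use the mean of the available ages, (23 + 40) / 2 = 31.5:

| Name | Age |
|---|---|
| Akshay | 23 |
| Akshat | 31.5 |
| Viraj | 40 |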
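Mean imputation as in the example above can be sketched in Python, assuming missing values are represented by `None` (a stand-in for the "NA" in the table):

```python
def mean_impute(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute: no observed values")
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]


ages = [23, None, 40]  # Akshay, Akshat, Viraj
print(mean_impute(ages))
```

Mode imputation would follow the same pattern with the most frequent value in place of the mean, which suits categorical columns.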

Inferential Statistics In inferential statistics, we draw conclusions about a population by looking at only a sample of it. For example, before a drug is released to the market, it is tested internally to check whether it is viable for release. Because the drug cannot be tested on the whole population, it is tested on a sample that best represents the population.

IQR IQR (interquartile range) is a measure of variability based on dividing the rank-ordered data set into four equal parts. It is computed as Q3 - Q1, the third quartile minus the first quartile.
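Python's standard library can compute the quartiles directly with `statistics.quantiles` (available since Python 3.8). Several quartile conventions exist, so the same data can give slightly different IQRs; the sketch below exposes the `method` argument so the difference is visible. The sample data is hypothetical.

```python
import statistics


def iqr(values, method="inclusive"):
    """Interquartile range Q3 - Q1 using statistics.quantiles."""
    q1, _median, q3 = statistics.quantiles(values, n=4, method=method)
    return q3 - q1


data = [1, 2, 3, 4, 5, 6, 7, 8]
print(iqr(data))                      # "inclusive" interpolation
print(iqr(data, method="exclusive"))  # the function's default convention
```

For this data the inclusive method gives quartiles 2.75 and 6.25 (IQR 3.5), while the exclusive method gives 2.25 and 6.75 (IQR 4.5).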

Iteration An iteration is a single update of an algorithm's parameters while training a model on a dataset; the number of iterations is how many such updates are made. For example, in each iteration of training a neural network, a certain number of training examples (a batch) is used to update the weights with gradient descent or some other weight update rule.
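Counting iterations in a mini-batch training loop makes the definition concrete. In this sketch the dataset size, batch size, and number of epochs (full passes over the data) are hypothetical; the loop performs no real training and only counts where the weight updates would happen.

```python
import math


def count_iterations(n_samples, batch_size, epochs):
    """Count weight updates (iterations) made by a mini-batch training loop."""
    iterations = 0
    for _epoch in range(epochs):  # one epoch = one full pass over the data
        for _start in range(0, n_samples, batch_size):
            # A real loop would compute the gradient on this mini-batch
            # and apply one weight update here; this sketch only counts them.
            iterations += 1
    return iterations


print(count_iterations(1000, 32, 3))
print(3 * math.ceil(1000 / 32))  # closed form: epochs * ceil(n_samples / batch_size)
```

The last, smaller batch of each epoch still counts as one iteration, which is why the closed form rounds up.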

J

Word

Description

Julia Julia is a high-level, high-performance dynamic programming language for numerical computing. Some important features of Julia are:

- Multiple dispatch: providing the ability to define function behavior across many combinations of argument types.

- Good performance, approaching that of statically compiled languages like C

- Built-in package manager

- Designed for parallelism and distributed computation

- Free and open source

K

Word

Description

K-Means

K-means is an unsupervised algorithm that solves the clustering problem. It follows a simple procedure to partition a given data set into a fixed number of clusters (say, k clusters). Data points inside a cluster are homogeneous, while different clusters are heterogeneous with respect to one another.
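The procedure above is usually implemented with Lloyd's algorithm: assign every point to its nearest centroid, move each centroid to the mean of its points, and repeat until nothing changes. A minimal Python sketch, assuming Euclidean distance and hand-picked initial centroids (in practice they are chosen randomly or with a scheme such as k-means++); the points are hypothetical.

```python
import math


def k_means(points, centroids, max_iter=100):
    """Lloyd's algorithm; returns (centroids, label of each point)."""
    centroids = [tuple(c) for c in centroids]

    def nearest(p):
        return min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))

    for _ in range(max_iter):
        # Assignment step: group points by their nearest centroid
        clusters = [[] for _ in centroids]
        for p in points:
            clusters[nearest(p)].append(p)
        # Update step: move each centroid to the mean of its cluster
        new_centroids = [
            tuple(sum(coord) / len(members) for coord in zip(*members)) if members else c
            for c, members in zip(centroids, clusters)
        ]
        if new_centroids == centroids:  # converged
            break
        centroids = new_centroids
    return centroids, [nearest(p) for p in points]


points = [(1, 1), (1.5, 2), (1, 1.5), (8, 8), (9, 8.5), (8.5, 9)]
centroids, labels = k_means(points, centroids=[(0, 0), (10, 10)])
print(centroids, labels)
```

An empty cluster simply keeps its previous centroid here; real implementations often re-seed it instead.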

Keras Keras is a simple, high-level neural network library written in Python. It was originally designed to run on top of TensorFlow and Theano (Keras 3 runs on TensorFlow, JAX, or PyTorch). Its goal is to make designing and experimenting with neural networks easier.

Following are some important features of Keras:

- User friendliness

- Modularity

- Easy extensibility

- Works with Python

kNN

K nearest neighbors (kNN) is a simple algorithm that stores all available cases and classifies a new case by a majority vote of its k neighbors: the case is assigned to the class most common among its k nearest neighbors, as measured by a distance function.

These distance functions can be Euclidean, Manhattan, Minkowski, or Hamming distance. The first three are used for continuous variables and the fourth (Hamming) for categorical variables. If k = 1, the case is simply assigned to the class of its nearest neighbor. Choosing the value of k can at times be a challenge when performing kNN modeling.
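The majority-vote rule can be sketched in a few lines of Python, assuming Euclidean distance and continuous features; the training points and labels below are hypothetical. Ties in the vote are broken by whichever tied class `Counter.most_common` lists first, which is an arbitrary choice of this sketch.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Classify query by majority vote among its k nearest training points."""
    neighbors = sorted(zip(train_points, train_labels),
                       key=lambda pair: math.dist(pair[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


train = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
labels = ["A", "A", "A", "B", "B", "B"]
print(knn_predict(train, labels, (1.5, 1.5)))
print(knn_predict(train, labels, (8.5, 8.5), k=1))
```

There is no training step beyond storing the data, which is why kNN is called a lazy learner: all the work happens at prediction time.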

Kurtosis

Kurtosis is a measure of the thickness (or heaviness) of the tails of a distribution. Depending on its value, a distribution can be classified into the following three categories:

- Mesokurtic: the kurtosis equals 3. A random variable that follows a normal distribution has a kurtosis of 3

- Platykurtic: the kurtosis is less than 3. The distribution has thinner tails and typically a lower peak than a normal distribution

- Leptokurtic: the kurtosis is greater than 3. The distribution has fatter tails and typically a higher peak than a normal distribution
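The thresholds above refer to the moment-based (Pearson, non-excess) kurtosis m4 / m2², where m2 and m4 are the second and fourth central moments; "excess kurtosis" subtracts 3 so that the normal distribution scores 0. A minimal Python sketch with hypothetical samples; the tolerance used for "equal to 3" is an arbitrary assumption, since sample data almost never hits 3 exactly. For comparison, `scipy.stats.kurtosis(values, fisher=False)` computes the same quantity.

```python
def kurtosis(values):
    """Moment-based (non-excess) kurtosis m4 / m2**2; a normal distribution gives 3."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n  # second central moment
    m4 = sum((v - mean) ** 4 for v in values) / n  # fourth central moment
    return m4 / m2 ** 2


def kurtosis_type(k, tol=1e-9):
    """Label a kurtosis value using the three categories above."""
    if abs(k - 3) <= tol:
        return "mesokurtic"
    return "platykurtic" if k < 3 else "leptokurtic"


flat = [1, 2, 3, 4, 5]                 # evenly spread, no tails
heavy = [0] * 8 + [10, -10]            # mostly zeros plus two extreme values
for sample in (flat, heavy):
    k = kurtosis(sample)
    print(k, kurtosis_type(k))
```

The two extreme values in `heavy` dominate the fourth moment, which is why kurtosis is best read as a measure of tail weight.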

L

Word

Description

Labeled Data A labeled dataset has a meaningful “label”, “class”, or “tag” associated with each of its records or rows. For example, in a dataset of images, the label might indicate whether an image contains a cat or a dog.

Labeled data is usually more expensive to obtain than raw unlabeled data, because preparing it involves manually labeling every piece of unlabeled data.

Labeled data is required for supervised learning algorithms.

Lasso Regression